
Image representation with stipples and other shapes - Introduction

Bernard Llanos — September 21, 2016 - 9:51pm

This and the following blog posts describe my undergraduate honours project (course code COMP 4905) for Fall 2016.

Project Overview

The sections below are copied from the project proposal (created September 5, 2016):

Motivation

Stippling is an artistic style wherein dots, usually of a single colour, are the only primitives used to represent the scene. Past work by Dr. Hua Li and Dr. David Mould provides an effective method for placing stipples so as to preserve both the tone and the structure of the input image [1]. However, the method has three weaknesses. First, in order to preserve tone, it places widely spaced stipples in light areas that should be left entirely white to avoid introducing texture. Second, light edges in the scene are lost, even when they are salient. Third, in areas of very dark tone, gaps between stipples detract from the overall impression of blackness.

While some modifications to the stipple placement algorithm may be explored to further improve the overall appearance of the stylized images, the proposed approach will abandon the goal of representing all image features with the same primitive. Inspired by the recent surge of interest in low-polygon artwork, the final stylized images will be generated by combining stippling with polygons or other well-defined geometric shapes. Whereas stipples are ideal for simulating highly textured regions, polygons are suited to regions with little texture, especially those with extreme tones.

Objectives

Because this is an image stylization project, the aesthetic quality of the results will be the primary focus. Images will be evaluated subjectively, with reference to principles from cognitive science and the visual arts where possible. The main objective will be to design and build a system for representing input images with stipples and geometric shapes, placed according to an algorithm that generally produces aesthetically pleasing results. Secondary goals include outputting images in a vector format (e.g. Scalable Vector Graphics), generating a minimal amount of output data for a given level of fidelity to the input image, and allowing for an efficient implementation by minimizing algorithmic complexity and providing opportunities for parallelization.

Expected Deliverables

Algorithms

Algorithms for preprocessing input images, stipple placement, polygon formation, and optimization or post-processing of the output will be designed and presented in detail. Pseudocode will be provided in conjunction with analyses of runtime complexity, space complexity and parallelizability.

Implementation

For ease of use, a minimal graphical user interface will be implemented to allow for casual input, display, and export of image files. The Qt framework (https://www.qt.io/) provides convenient utilities for this purpose, and the primary challenge will be integrating Qt with libraries supporting the image processing, stippling and polygon construction algorithms executed through the user interface. Technologies used may include OpenCV (http://opencv.org/), the Computational Geometry Algorithms Library (http://www.cgal.org/), OpenCL (https://www.khronos.org/opencl/), and OpenGL (https://www.opengl.org/).

While the basic stippling algorithm will tentatively be selected from [1], several candidates are being considered for polygon construction. The ease with which the final algorithms can be implemented remains unknown.

Test Images

In the time remaining after the initial implementation, the behaviour of the algorithm will be investigated in detail on a wide range of images. Assuming the system produces reasonably attractive output on a sufficient range of natural images, some quantitative experiments will be performed as well. Experiments will study, for example, algorithm performance, output size, and image structure preservation. Some will require generating synthetic images based on systematically selected parameters such as texture scale and strength, contrast, edge strength, and steepness of colour gradients. Data generation may be accomplished by extending the implementation, but most of it should be achievable using image editing software.

Current Progress

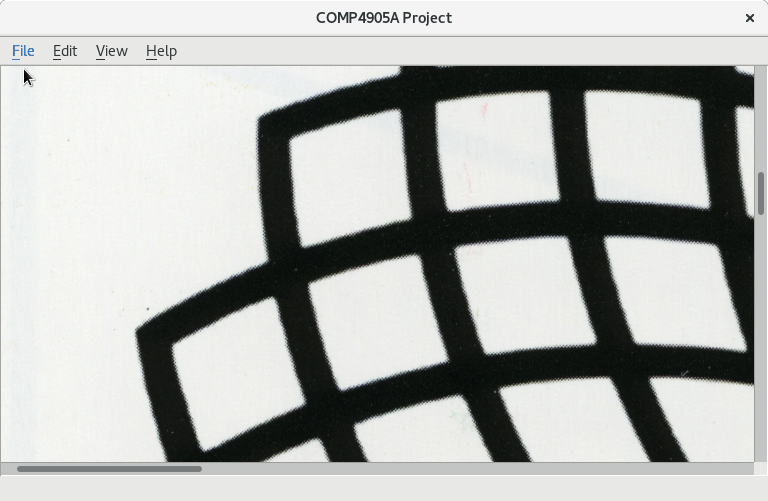

Presently, I have adapted the Qt Image Viewer Example (http://doc.qt.io/qt-5/qtwidgets-widgets-imageviewer-example.html) to create a small graphical user interface for loading image-format files, as shown below.

The image viewer can load, save, copy and paste image files.

I plan to use the image viewer as a platform for running image processing algorithms on images, to generate either raster or vector images. The following features have yet to be implemented to achieve this purpose:

- Loading and saving SVG images, following the Qt SVG Viewer and SVG Generator examples, to provide vector image support

- Menu options for running algorithms on the currently displayed image. In the future, I may need to allow multiple images to be loaded at once, and to provide an interface for selecting input images for a given algorithm.

- Loading algorithm parameters from files. Configuration by means of text files is simpler to implement than graphical interfaces for changing parameter values for each algorithm. In particular, I plan to port configuration file reading code from a past project (https://github.com/bllanos/win32_base).
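To illustrate the third item, here is a minimal sketch of text-file configuration in standard C++. It is not the code from win32_base: the `key = value` file format, the `#` comment syntax, and the names `parseParameters`, `getDouble`, and `trim` are all assumptions made for this example.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <stdexcept>
#include <string>

// Remove leading and trailing whitespace from a string.
std::string trim(const std::string& s) {
    const char* whitespace = " \t\r\n";
    const auto first = s.find_first_not_of(whitespace);
    if (first == std::string::npos) return "";
    const auto last = s.find_last_not_of(whitespace);
    return s.substr(first, last - first + 1);
}

// Parse "key = value" lines from a stream (hypothetical format).
// Text after '#' is a comment; lines without '=' or with an empty key are skipped.
std::map<std::string, std::string> parseParameters(std::istream& in) {
    std::map<std::string, std::string> params;
    std::string line;
    while (std::getline(in, line)) {
        const auto hash = line.find('#');
        if (hash != std::string::npos) line.erase(hash);
        const auto eq = line.find('=');
        if (eq == std::string::npos) continue;
        const std::string key = trim(line.substr(0, eq));
        if (key.empty()) continue;
        params[key] = trim(line.substr(eq + 1));
    }
    return params;
}

// Look up a numeric parameter, returning `fallback` when the key is
// missing or its value cannot be converted to a number.
double getDouble(const std::map<std::string, std::string>& params,
                 const std::string& key, double fallback) {
    assert(!key.empty());
    const auto it = params.find(key);
    if (it == params.end()) return fallback;
    try {
        return std::stod(it->second);
    } catch (const std::exception&) {
        return fallback;
    }
}
```

An algorithm could then read its settings with a call such as `getDouble(params, "stipple_radius", 1.0)`, where `stipple_radius` is a made-up parameter name. Falling back to a default keeps a partially filled file usable while parameters are still being tuned.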

Following the development of the minimal user interface with placeholder algorithms outputting raster and vector images, I will reproduce the stippling algorithm from [1].
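As a rough idea of what a placeholder algorithm with vector output might look like, the sketch below places dots on a regular grid, ignoring the image content entirely, and writes them as SVG `<circle>` elements. It uses standard C++ streams rather than Qt's SVG classes, and the names `Stipple`, `gridStipples`, and `writeSvg` are hypothetical. It is a stand-in for testing the export path, not the stippling method from [1].

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// One stipple: a filled dot with centre (x, y) and radius r, in pixels.
struct Stipple {
    double x;
    double y;
    double r;
};

// Placeholder "algorithm": a regular grid of equally sized dots.
// A real stippling algorithm would choose positions from the image content.
std::vector<Stipple> gridStipples(int width, int height, int spacing, double radius) {
    assert(spacing > 0);
    std::vector<Stipple> result;
    for (int y = spacing / 2; y < height; y += spacing) {
        for (int x = spacing / 2; x < width; x += spacing) {
            result.push_back({static_cast<double>(x), static_cast<double>(y), radius});
        }
    }
    return result;
}

// Write the stipples as black circles on a white background.
void writeSvg(std::ostream& out, int width, int height,
              const std::vector<Stipple>& stipples) {
    assert(width > 0 && height > 0);
    out << "<svg xmlns=\"http://www.w3.org/2000/svg\" width=\"" << width
        << "\" height=\"" << height << "\" viewBox=\"0 0 " << width << ' '
        << height << "\">\n";
    out << "  <rect width=\"100%\" height=\"100%\" fill=\"white\"/>\n";
    for (const Stipple& s : stipples) {
        out << "  <circle cx=\"" << s.x << "\" cy=\"" << s.y << "\" r=\"" << s.r
            << "\" fill=\"black\"/>\n";
    }
    out << "</svg>\n";
}
```

Passing a `std::ofstream` as `out` would save the result to a file. Because each stipple becomes one short element, the output size grows linearly with the number of stipples, which is relevant to the secondary goal of minimizing output data.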

References

[1] H. Li and D. Mould, "Structure-preserving Stippling by Priority-based Error Diffusion," in Proc. Graphics Interface, 2011, pp. 127-134. (http://gigl.scs.carleton.ca/node/86)