
Selecting superpixels according to salience or filter residuals

Bernard Llanos — November 18, 2016 - 6:36pm

I extended my superpixel filtering module so that it accepts an arbitrary input image representing pixel scores. Lighter pixels (by lightness in the CIE L*a*b* colour space) are interpreted as more strongly preferring stippled rendering, whereas darker pixels are interpreted as more strongly preferring smooth rendering. The filter first computes the average lightness within each superpixel. It then performs Otsu thresholding on these average lightness values to classify superpixels into two groups: those which will be stippled, and those which will be rendered with smoothed colours only.
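The post does not show the module's code. The following is a minimal NumPy sketch of the two steps just described, assuming the segmentation is given as a 2-D integer label array with ids 0..K-1 and the score image has already been converted to L* values. The function names are hypothetical, and computing Otsu's threshold from a 256-bin histogram of the per-superpixel means is one common way to implement it, not necessarily what the module does.

```python
import numpy as np

def superpixel_mean_scores(score, labels):
    """Average per-pixel score (e.g. L* lightness) inside each superpixel.

    score:  2-D float array of per-pixel scores.
    labels: 2-D int array, same shape, superpixel ids 0..K-1 (all present).
    """
    flat = labels.ravel()
    counts = np.bincount(flat)
    sums = np.bincount(flat, weights=score.ravel())
    return sums / counts

def otsu_threshold(values, n_bins=256):
    """Otsu's method: threshold maximizing the between-class variance."""
    hist, edges = np.histogram(values, bins=n_bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w = hist.astype(float)
    w0 = np.cumsum(w)                 # count in lower class (bins 0..k)
    w1 = w0[-1] - w0                  # count in upper class (bins k+1..)
    m0 = np.cumsum(w * centers)       # unnormalized lower-class sum
    valid = (w0 > 0) & (w1 > 0)
    mu0 = np.where(valid, m0 / np.where(w0 > 0, w0, 1), 0)
    mu1 = np.where(valid, (m0[-1] - m0) / np.where(w1 > 0, w1, 1), 0)
    between = np.where(valid, w0 * w1 * (mu0 - mu1) ** 2, -1)
    k = np.argmax(between)
    return edges[k + 1]               # boundary between bin k and bin k+1

def classify_superpixels(score, labels):
    """True = stipple (lighter mean score), False = smooth rendering."""
    means = superpixel_mean_scores(score, labels)
    return means >= otsu_threshold(means)

# Toy usage: four 2x2 superpixels with mean scores 10, 15, 70 and 80
labels = np.array([[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]])
score = np.array([10.0, 15.0, 70.0, 80.0])[labels]
print(classify_superpixels(score, labels).tolist())  # [False, False, True, True]
```

Because the module only sees a score image, any source of scores (a salience map, a filter residual) can be substituted without changing this code.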

Consequently, I was free to experiment with a wide variety of methods for selecting superpixels for stippling or smooth rendering, without having to implement these methods myself: any method that outputs a score image can supply the module's input.

Salience Detectors

Previously, I selected superpixels for stippling when they had high-frequency textures or mid-range lightnesses, using the standard deviation of lightness within each superpixel as a proxy for these properties. Such selection criteria are purely local and do not reflect the high-level perceptual content of an image (i.e., the objects depicted in it). Ideally, I would like to classify superpixels as belonging to the "figure" or the "ground", and apply stipples to the figure regions in order to emphasize the picture's key subject matter against the background.
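For comparison, the earlier purely local criterion can be sketched in the same setting. This hypothetical helper (not the author's code) computes the lightness standard deviation of each superpixel, assuming the same 2-D integer label array with ids 0..K-1. Indexing the result with the label array turns it back into a per-pixel score image of the kind the thresholding step consumes.

```python
import numpy as np

def superpixel_lightness_std(lightness, labels):
    """Population standard deviation of lightness within each superpixel."""
    flat = labels.ravel()
    vals = lightness.ravel().astype(float)
    counts = np.bincount(flat)
    means = np.bincount(flat, weights=vals) / counts
    # Squared deviation of every pixel from its own superpixel's mean
    dev2 = (vals - means[flat]) ** 2
    return np.sqrt(np.bincount(flat, weights=dev2) / counts)

def std_score_image(lightness, labels):
    """Per-pixel score image: each pixel takes its superpixel's std."""
    return superpixel_lightness_std(lightness, labels)[labels]
```

A flat superpixel scores 0, while a textured one scores high, so textured regions come out lighter and lean towards stippling.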

Decomposing an image into objects, even if only to the point of a figure/ground distinction, is a difficult problem that requires a more global analysis of the image. Solutions commonly incorporate machine learning/artificial intelligence and numerical optimization algorithms.

As such, I was pleased to find code online implementing several image salience detectors. Sai Srivatsa R has provided MATLAB implementations of 5 salience detectors at https://github.com/saisrivatsan/Saliency-Objectness. The detectors are described in the following articles:

[1] Sai Srivatsa R, R Venkatesh Babu. Salient Object Detection via Objectness Measure. In ICIP, 2015.

[2] Wangjiang Zhu, Shuang Liang, Yichen Wei, and Jian Sun. Saliency Optimization from Robust Background Detection. In CVPR, 2014.

[3] F. Perazzi, P. Krahenbuhl, Y. Pritch, and A. Hornung. Saliency filters: Contrast based filtering for salient region detection. In CVPR, 2012.

[4] Y. Wei, F. Wen, W. Zhu, and J. Sun. Geodesic saliency using background priors. In ECCV, 2012.

[5] C. Yang, L. Zhang, H. Lu, X. Ruan, and M.-H. Yang. Saliency detection via graph-based manifold ranking. In CVPR, 2013.

I experimented with the last four detectors, as the first also requires objectness estimates as input, whereas the last four have no additional input requirements. (Objectness proposals are generated based on training data. I wish to avoid using training data, as training data is a significant overhead, and is equivalent to a huge number of obscure parameters.)

Salience Detection Results

The following series of figures illustrates the output of the salience detectors on two images from the NPR benchmark (http://gigl.scs.carleton.ca/benchmark_npr_general). The detectors performed better on most of the other images in the benchmark, but in this post I wish to focus on the problems with my method, so I have not included the better results.

Salience maps were produced with the code from Sai Srivatsa R, in the state of commit 785a9f75659edcd0305213641235cecea30900d7. In left-to-right, top-to-bottom order, they correspond to the output of references [2], [3], [4], and [5] listed above:

Image 'arch1024'

Original Image

Salience Maps

![Arch image salience map produced with the method from [2]](/sites/default/files/uploads/images/bllanos_20161118_arch1024_wCtr_Optimized.png)

![Arch image salience map produced with the method from [3]](/sites/default/files/uploads/images/bllanos_20161118_arch1024_SF.png)

![Arch image salience map produced with the method from [4]](/sites/default/files/uploads/images/bllanos_20161118_arch1024_GS.png)

![Arch image salience map produced with the method from [5]](/sites/default/files/uploads/images/bllanos_20161118_arch1024_MR_stage2.png)

This is a challenging image for salient object detection, because the rocks are the features of interest, yet they occupy so much of the image that they appear to form its background.

I chose the inverted output of [3] (the Saliency Filter method), shown below, as the input for superpixel rendering decisions.

![Arch image salience map produced with the method from [3], with colours inverted](/sites/default/files/uploads/images/bllanos_20161118_arch1024_SF_inverted.png)

Image 'athletes1024'

Original Image

Salience Maps

![Athletes image salience map produced with the method from [2]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_wCtr_Optimized.png)

![Athletes image salience map produced with the method from [3]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_SF.png)

![Athletes image salience map produced with the method from [4]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_GS.png)

![Athletes image salience map produced with the method from [5]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_MR_stage2.png)

This image is also quite challenging. If one selects salient areas based on fine detail, one would exclude the smooth skin tones. On the other hand, if one selects salient areas based on bright colours, one would include the blurry background.

Stylization Results using Salience Detectors

Image 'arch1024'

Using the inverted salience map output by the Saliency Filter method [3], I obtained the following stylization:

![Stylization of the rock arch image, using the inverted salience map from [3]](/sites/default/files/uploads/images/bllanos_20161118_arch1024_k500_SF_inverted_smooth.png)

Aside from the effect of the unsatisfactory salience map (stipples in the sky), there are undesirable pink blobs above the rock wall. The pink blobs are a side effect of imperfect segmentation: some superpixels in the sky crossed the border between the sky and the rocks. The border crossings are shown in the image below, which was created by blending SLIC superpixels [6], rendered with their average colours, on top of the original image.

Perhaps I can resolve the colour bleeding problem by rendering each pixel with a weighted average of the mean colours of its own superpixel and of the adjacent superpixels. An adjacent superpixel's weight would be inversely proportional to the difference between its average colour and the average colour of the source pixel's superpixel, and also inversely proportional to the distance of the source pixel from the adjacent superpixel. Of course, this approach has some possible side effects:

- Depending on parameter settings, it may smooth over superpixel boundaries that lie along strong edges in the image, eliminating the strong edges

- It would be more computationally demanding, because it requires the distance from each pixel to every adjacent superpixel, rather than only the distance from the pixel to the nearest superpixel border
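The proposed blending is not implemented in the post; the following is a hypothetical per-pixel sketch of one way to realize it. Assumptions: "adjacent" means sharing a horizontal or vertical pixel border, the distance to a superpixel is the Euclidean distance to its nearest pixel, and each weight uses a 1/(1 + x/sigma) falloff instead of strict inverse proportionality (which would give the pixel's own superpixel, at zero difference and zero distance, an infinite weight). The scale parameters `sigma_c` and `sigma_d` are made up for illustration.

```python
import numpy as np

def adjacent_labels(labels, k):
    """Ids of superpixels sharing a horizontal or vertical border with k."""
    found = set()
    for a, b in ((labels[:, :-1], labels[:, 1:]),   # left/right neighbours
                 (labels[:-1, :], labels[1:, :])):  # up/down neighbours
        border = a != b
        found.update(b[border & (a == k)].tolist())
        found.update(a[border & (b == k)].tolist())
    return sorted(found)

def blended_colour(y, x, labels, mean_colours, sigma_c=10.0, sigma_d=3.0):
    """Hypothetical blend of superpixel mean colours at pixel (y, x).

    mean_colours: (K, C) array of per-superpixel mean colours.
    sigma_c, sigma_d: made-up falloff scales for colour difference / distance.
    """
    own = labels[y, x]
    own_colour = mean_colours[own]
    weights = [1.0]              # own superpixel: zero difference and distance
    colours = [own_colour]
    for j in adjacent_labels(labels, own):
        colour_diff = np.linalg.norm(mean_colours[j] - own_colour)
        ys, xs = np.nonzero(labels == j)
        dist = np.min(np.hypot(ys - y, xs - x))  # nearest pixel of superpixel j
        weights.append(1.0 / ((1.0 + colour_diff / sigma_c)
                              * (1.0 + dist / sigma_d)))
        colours.append(mean_colours[j])
    weights = np.asarray(weights)
    return weights @ np.asarray(colours) / weights.sum()
```

The brute-force search over every pixel of each adjacent superpixel makes the extra cost from the second point concrete, and the normalized weights act as per-pixel membership values in a soft segmentation.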

Note that blending the average colours of multiple superpixels at each pixel is equivalent to using a soft segmentation of the image, as opposed to using a hard segmentation. Therefore, a soft segmentation may be more appropriate as the input for rendering.

Image 'athletes1024'

Using the inverted salience map output by the Geodesic Saliency method [4], I obtained the following stylization:

![Stylization of the athletes image, using the salience map from [4]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_k500_GS_smooth.png)

The salience map from the Saliency Filter method [3] leads to much more conservative stipple placement:

![Stylization of the athletes image, using the salience map from [3]](/sites/default/files/uploads/images/bllanos_20161118_athletes1024_k500_SF_smooth.png)

The above images illustrate that faces are poorly abstracted by the stylization method. Small faces in particular tend to be either too coarsely stippled or smeared, when subject to stippling or smooth rendering, respectively. The human visual system is quite critical of abstraction effects that distort faces, whereas it may not notice crude abstraction effects in less familiar or less salient areas, such as the grass field in this example.

Cumulative Range Geodesic Filtering

I also tested the Cumulative Range Geodesic Filter for superpixel classification. The Cumulative Range Geodesic Filter is an edge-preserving smoothing filter [7], and so can provide estimates of blurriness for the purposes of stippling regions with high-frequency detail. I used the following residual image provided by Dr. David Mould as the input for superpixel classification:
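The filter itself is described in [7] and is not reproduced here. To show how a residual image can become a score image for the superpixel module, this sketch substitutes a plain box blur for the Cumulative Range Geodesic Filter, and takes the absolute difference as the residual (an assumption about how the residual is formed). A box blur is not edge-preserving, so unlike an edge-preserving filter it also leaves large residuals along strong edges; treat it only as a placeholder. Lighter output pixels mark detail removed by smoothing, matching the module's convention that lighter pixels prefer stippling.

```python
import numpy as np

def box_blur(img, radius=2):
    """Mean filter with edge padding. Stand-in only: NOT edge-preserving."""
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w))
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / size**2

def residual_score(lightness, radius=2):
    """Absolute residual |I - smooth(I)|, normalized to [0, 1]."""
    res = np.abs(lightness - box_blur(lightness, radius))
    peak = res.max()
    return res / peak if peak > 0 else res
```

Feeding `residual_score(...)` into the per-superpixel averaging and Otsu thresholding would then stipple superpixels with the most fine detail.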

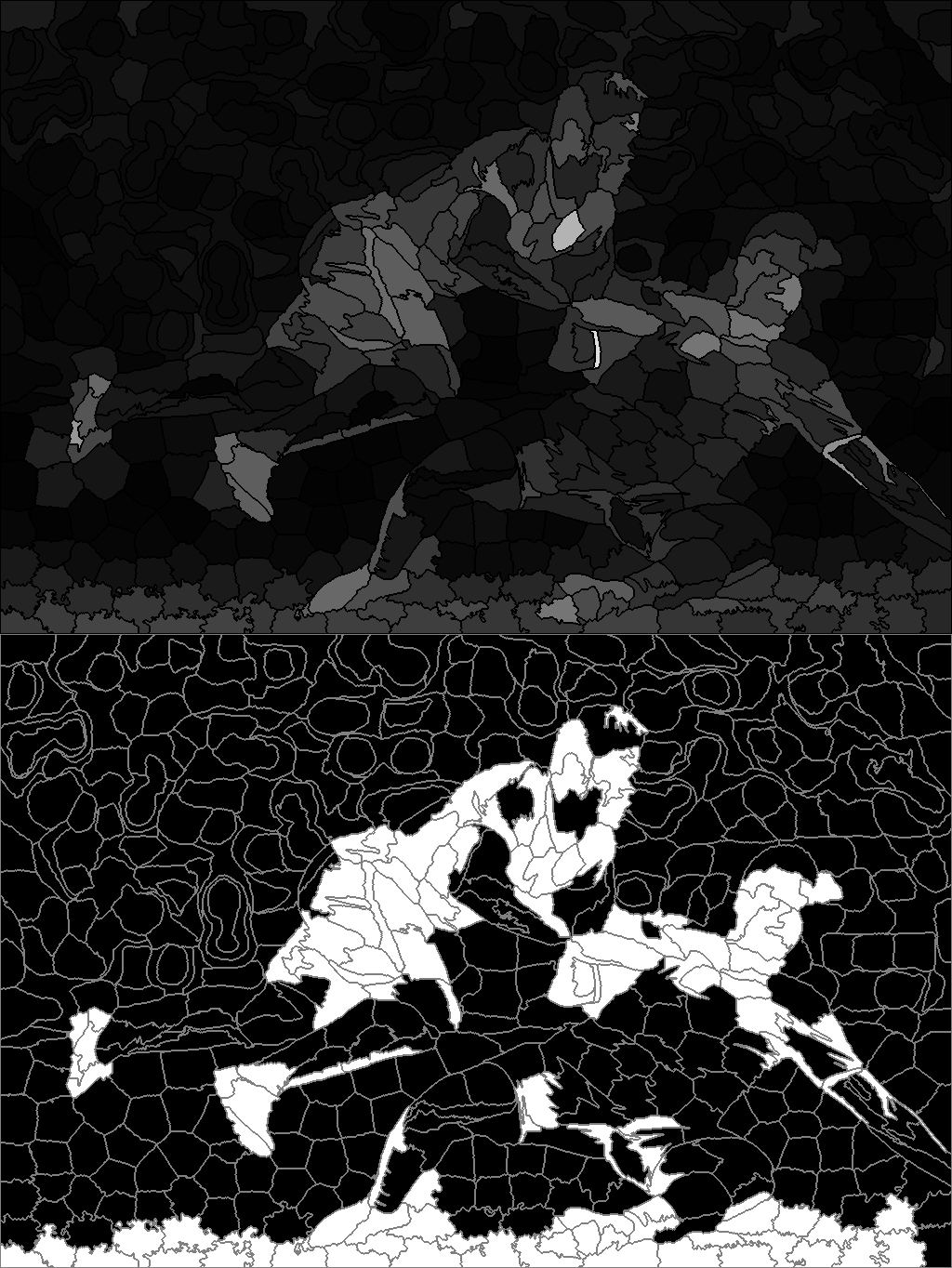

The resulting superpixel scoring (top half) and Otsu thresholding of superpixel scores (bottom half) are as follows:

The final stylization result is arguably more appealing than the results obtained using the output of the salience detectors for superpixel classification:

Additional References

[6] R. Achanta et al. "SLIC superpixels compared to state-of-the-art superpixel methods." IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 11, pp. 2274-2281, Nov. 2012. (Authors' website for SLIC: http://ivrg.epfl.ch/research/superpixels)

[7] D. Mould. "Image and Video Abstraction using Cumulative Range Geodesic Filtering." Computers & Graphics, vol. 37, no. 5, pp. 413-430, Aug. 2013. (Available on this website at http://gigl.scs.carleton.ca/node/481)